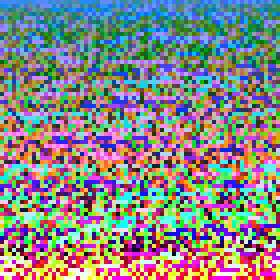

So which color space is best?

Using a list of 4913 colors (17³, with 0 <= R,G,B <= 255 at step 15), plus the orange from the Blogger icon, I sorted a bunch of 4x4 boxes for a large variety of color spaces. The diagrams are read left to right, top to bottom. The first color is the index color. The point is to have the colors that are very similar to the index color close to it, and to avoid having similar colors far away or very dissimilar colors close by. This is a bit hard to judge, but you can generally eyeball it. The top should be the matching colors, and you shouldn't see very similar colors strewn about the rest of the diagram.

The grid is 70x70 boxes, which is 4900 slots, one of them taken by the index color. The list holds 4913 colors (17³) plus the orange, so the 14 most different colors will not be shown.
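The sort-and-lay-out step described above can be sketched roughly like this. It is only a sketch under assumptions: the class and method names are made up, the palette levels 0, 15, ..., 240 are one reading of "step 15" that gives 17 levels per channel, and the index color is a placeholder orange, not the actual Blogger icon value.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.function.ToDoubleBiFunction;

final class DistanceGrid {
    static final int COLS = 70, ROWS = 70;

    // Assumed reading of "step 15, 17 levels": 0, 15, ..., 240 per channel.
    static List<int[]> palette() {
        List<int[]> colors = new ArrayList<>();
        for (int r = 0; r <= 240; r += 15)
            for (int g = 0; g <= 240; g += 15)
                for (int b = 0; b <= 240; b += 15)
                    colors.add(new int[] {r, g, b});
        return colors;
    }

    // Index color first, then the palette ordered by distance to it,
    // cut off at the number of cells in the grid.
    static List<int[]> layout(int[] index, List<int[]> colors,
                              ToDoubleBiFunction<int[], int[]> metric) {
        List<int[]> sorted = new ArrayList<>(colors);
        sorted.sort(Comparator.comparingDouble((int[] c) -> metric.applyAsDouble(index, c)));
        List<int[]> cells = new ArrayList<>();
        cells.add(index);
        cells.addAll(sorted.subList(0, Math.min(sorted.size(), COLS * ROWS - 1)));
        return cells;
    }

    public static void main(String[] args) {
        int[] index = {255, 128, 0}; // placeholder orange, NOT the real Blogger icon color
        List<int[]> colors = palette();
        // Any metric can be plugged in; this one is the plain sum of channel differences.
        List<int[]> cells = layout(index, colors, (p, q) ->
                Math.abs(p[0] - q[0]) + Math.abs(p[1] - q[1]) + Math.abs(p[2] - q[2]));
        System.out.println(colors.size() + 1 - cells.size() + " colors left out");
    }
}
```

Cell n (counting from 0) would then be drawn at column n % 70, row n / 70, which gives the left to right, top to bottom reading order.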

Additive color. Don't think Euclidean is the simplest distance. I literally took the per-channel differences of R, G, and B, added them up, and called that a distance.
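For what it's worth, that additive distance is about as short as code gets. A minimal sketch, assuming the "value distances" are absolute per-channel differences (the class and method names are just for illustration):

```java
final class AdditiveDistance {
    // Sum of absolute per-channel differences (taxicab distance in RGB).
    static int distance(int r1, int g1, int b1, int r2, int g2, int b2) {
        return Math.abs(r1 - r2) + Math.abs(g1 - g2) + Math.abs(b1 - b2);
    }

    public static void main(String[] args) {
        // Illustrative colors only: an orange-ish value against pure red.
        System.out.println(distance(255, 128, 0, 255, 0, 0)); // prints 128
    }
}
```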

Euclidean Distance RGB

Sqrt(R² + G² + B²), where R, G, and B are the per-channel differences.
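Spelled out as code, with the differences made explicit, it would look something like the sketch below. The names are illustrative, not from any library.

```java
final class EuclideanRgb {
    // Straight-line distance between two colors treated as points in the RGB cube.
    static double distance(int r1, int g1, int b1, int r2, int g2, int b2) {
        long dr = r1 - r2;
        long dg = g1 - g2;
        long db = b1 - b2;
        return Math.sqrt(dr * dr + dg * dg + db * db);
    }

    public static void main(String[] args) {
        System.out.println(distance(0, 0, 0, 3, 4, 0)); // prints 5.0
    }
}
```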

Redmean

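    // Redmean distance; the method signature is assumed, channels are 0..255.
    static double redmean(int r1, int g1, int b1, int r2, int g2, int b2) {
        long rmean = ((long) r1 + (long) r2) / 2;
        long r = (long) r1 - (long) r2;
        long g = (long) g1 - (long) g2;
        long b = (long) b1 - (long) b2;
        return Math.sqrt((((512 + rmean) * r * r) >> 8) + 4 * g * g + (((767 - rmean) * b * b) >> 8));
    }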

Given at http://www.compuphase.com/cmetric.htm, which is actually a pretty great article.

Luv, Standard Euclidean

The Redmean page says it gives results pretty close to the Luv color space with much less computation. So here's Luv: lightness L plus the two chromaticity coordinates u and v.

Lab, Delta E (standard Euclidean distance)

sqrt(L² + a² + b²)
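The distance itself is trivial; the work is getting from RGB to Lab. Here is a rough sketch of that path, assuming the inputs are sRGB values and a D65 white point (the usual pairing, but an assumption about the setup used for the diagrams). The class and method names are illustrative.

```java
final class LabDeltaE76 {
    // D65 reference white; assumed, since the post does not say which white point was used.
    private static final double XN = 0.95047, YN = 1.0, ZN = 1.08883;

    // Undo the sRGB transfer curve for one 8-bit channel.
    static double linearize(int c8) {
        double c = c8 / 255.0;
        return c <= 0.04045 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
    }

    // The piecewise cube-root function from the CIE Lab definition.
    static double f(double t) {
        final double d = 6.0 / 29.0;
        return t > d * d * d ? Math.cbrt(t) : t / (3 * d * d) + 4.0 / 29.0;
    }

    // sRGB (0..255 per channel) -> XYZ -> {L, a, b}.
    static double[] toLab(int r8, int g8, int b8) {
        double r = linearize(r8), g = linearize(g8), b = linearize(b8);
        double x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b;
        double y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b;
        double z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b;
        double fx = f(x / XN), fy = f(y / YN), fz = f(z / ZN);
        return new double[] {116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)};
    }

    // Plain Euclidean distance in Lab.
    static double deltaE76(double[] p, double[] q) {
        double dL = p[0] - q[0], da = p[1] - q[1], db = p[2] - q[2];
        return Math.sqrt(dL * dL + da * da + db * db);
    }

    public static void main(String[] args) {
        // Google Icon blue from this post against plain white.
        System.out.println(deltaE76(toLab(66, 133, 244), toLab(255, 255, 255)));
    }
}
```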

Lab, Delta 94

Same Lab color space, but rather than a standard Euclidean color distance, the good folks at the CIE decided in 1994 to go ahead and tweak it a bit.
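The tweak is mostly about scaling the chroma and hue differences by how saturated the reference color is. A sketch of the 1994 formula, assuming the graphic-arts constants (kL = 1, K1 = 0.045, K2 = 0.015) with kC = kH = 1, and taking colors that are already in Lab; the class and method names are illustrative.

```java
final class DeltaE94 {
    // Graphic-arts parameters; the textile variant uses different values.
    static final double KL = 1.0, K1 = 0.045, K2 = 0.015;

    // ref and sample are {L, a, b}. The result is NOT symmetric in its arguments,
    // because the scaling uses the chroma of the reference color only.
    static double deltaE94(double[] ref, double[] sample) {
        double dL = ref[0] - sample[0];
        double c1 = Math.hypot(ref[1], ref[2]);
        double c2 = Math.hypot(sample[1], sample[2]);
        double dC = c1 - c2;
        double da = ref[1] - sample[1], db = ref[2] - sample[2];
        // Squared hue difference; clamped because rounding can push it slightly below zero.
        double dH2 = Math.max(0.0, da * da + db * db - dC * dC);
        double sC = 1 + K1 * c1;
        double sH = 1 + K2 * c1;
        double tL = dL / KL;
        double tC = dC / sC;
        return Math.sqrt(tL * tL + tC * tC + dH2 / (sH * sH));
    }

    public static void main(String[] args) {
        double[] gray50 = {50, 0, 0};
        double[] gray60 = {60, 0, 0};
        System.out.println(deltaE94(gray50, gray60)); // prints 10.0
    }
}
```

For neutral colors the scaling factors are both 1, so a pure lightness difference comes out the same as in the plain Euclidean version.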

Lab, Delta E 2000, CIEDE2000

Using the reworked, tweaked distance formula from 2000. I actually like this one quite a lot. It's my personal favorite and gives consistently good results.

Lab Delta CMC

Intended for threads, paints, dyes, etc. It's the standard for that industry. Apparently you're much better off with a color to dye something than no color, which is maybe why everything with no color is basically at the bottom, maximally far away from colors with saturation.

Hunter Lab. It uses the XYZ color space (which Lab also uses) but a different way to get the L, a, b values, which are lightness and two opponent-color axes (green to red and blue to yellow).

HSL. You can't just plop this into a Euclidean distance formula; you need to make a shape and get the distance within that shape. Anything with a hue must have this done, because H=360° and H=0° are not polar opposites, they are the same color. They should be in the same place, not 360 apart.

In this case, I have some old code, and it wraps HSL into two half cones stuck together base to base. Which is to say the shape tapers to a point at L = 0 and at L = 1, and is widest, with the most saturated colors, at L = 0.5.
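I don't have the old code in front of me here, so the following is only a guess at the shape: a sketch that puts hue around the axis, lightness along it, and lets the radius shrink to zero at both ends. The radius formula and the names are assumptions, and the original code may well have scaled things differently.

```java
final class HslBicone {
    // hDeg in degrees, s and l in 0..1. Returns {x, y, z} inside a double cone.
    static double[] toPoint(double hDeg, double s, double l) {
        double angle = Math.toRadians(hDeg);
        // Radius is widest at l = 0.5 and collapses to a point at l = 0 and l = 1.
        double radius = s * (1 - Math.abs(2 * l - 1));
        return new double[] {radius * Math.cos(angle), radius * Math.sin(angle), l};
    }

    // Euclidean distance between two points of the shape.
    static double distance(double[] p, double[] q) {
        double dx = p[0] - q[0], dy = p[1] - q[1], dz = p[2] - q[2];
        return Math.sqrt(dx * dx + dy * dy + dz * dz);
    }

    public static void main(String[] args) {
        // Hue 0 and hue 360 land on (almost exactly) the same point.
        System.out.println(distance(toPoint(0, 1, 0.5), toPoint(360, 1, 0.5)));
        // Opposite hues at full saturation sit on opposite sides of the widest ring.
        System.out.println(distance(toPoint(0, 1, 0.5), toPoint(180, 1, 0.5)));
    }
}
```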

HSB/HSV

Using value rather than lightness. Placed into a cone.

    // H is the hue as an angle in radians; S and V are in 0..1.
    double X = S*V*Math.cos(H);
    double Y = S*V*Math.sin(H);
    double Z = V;

Directly invokes the HSB routine from Java's Color.RGBtoHSB().
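One thing to watch: Color.RGBtoHSB() returns hue as a fraction from 0 to 1, not an angle, so it has to be scaled to radians before it goes into Math.cos and Math.sin. A sketch of the whole path, with illustrative class and method names and a placeholder orange:

```java
import java.awt.Color;

final class HsbCone {
    // h, s, v in 0..1, the ranges Color.RGBtoHSB returns. Returns {X, Y, Z}.
    static double[] toCone(float h, float s, float v) {
        double angle = 2 * Math.PI * h; // hue fraction -> radians
        return new double[] {s * v * Math.cos(angle), s * v * Math.sin(angle), v};
    }

    // Euclidean distance between two cone points.
    static double distance(double[] p, double[] q) {
        double dx = p[0] - q[0], dy = p[1] - q[1], dz = p[2] - q[2];
        return Math.sqrt(dx * dx + dy * dy + dz * dz);
    }

    public static void main(String[] args) {
        // Placeholder orange (not the real Blogger icon value) against Google Icon blue.
        float[] a = Color.RGBtoHSB(255, 128, 0, null);
        float[] b = Color.RGBtoHSB(66, 133, 244, null);
        System.out.println(distance(toCone(a[0], a[1], a[2]), toCone(b[0], b[1], b[2])));
    }
}
```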

HCL: M. Sarifuddin and Rokia Missaoui

I just wrote a very long article dissing this color space, mostly because it's ill-defined and claims to be better than it is through methodological flaws. And I dunno what else.

Weighted Euclidean: 22,43,35

About this time last year, I wrote a blog post where I ran all the numbers for Delta E, Lab, averaged the distances based on the specific colors, and came up with these weights, also noting that the Compuphase paper got similar results. There are standard given weights, but these are actually for brightness (luma): they are the weights for how much each channel contributes to how bright the color looks. 30,59,11 are the correct weights for that; blue contributes very little to how bright a color seems. In fact, anti-blue (yellow) is wildly bright, like bizarrely so. You can't even read yellow text on a white background.
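To make the comparison concrete, here is a sketch of a weighted Euclidean distance. It assumes the weights multiply the squared channel differences, which is how the Compuphase article writes its weighted formula; the class and method names are illustrative.

```java
final class WeightedEuclidean {
    // p and q are {r, g, b}; wr, wg, wb weight the squared channel differences.
    static double distance(int[] p, int[] q, double wr, double wg, double wb) {
        double dr = p[0] - q[0];
        double dg = p[1] - q[1];
        double db = p[2] - q[2];
        return Math.sqrt(wr * dr * dr + wg * dg * dg + wb * db * db);
    }

    public static void main(String[] args) {
        // Two colors that differ only in blue.
        int[] a = {100, 100, 100};
        int[] b = {100, 100, 145};
        System.out.println(distance(a, b, 22, 43, 35)); // blue counts for a lot
        System.out.println(distance(a, b, 30, 59, 11)); // blue barely registers
    }
}
```

With 30,59,11 a blue-only change scores much lower than the same change does under 22,43,35, which is why bluish colors end up scattered in those diagrams.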

Weighted Euclidean: 30,59,11

We're dealing with orange (specifically Blogger icon orange), so the huge overemphasis on red is going to make it cluster like this. But let's check out a couple of other colors with the same weights.

Weighted Euclidean: 22,43,35

Weighted Euclidean: 30,59,11

Weighted Euclidean: 22,43,35

Weighted Euclidean: 30,59,11

The weighting here is very anti-blue. As a result bluish colors get tossed all over the place.

The blue here is Google Icon blue (66,133,244).

Lab Delta 2000, Green

For Reference.

Lab Delta 2000, Google Blue

For Reference

Hopefully we now have a better idea about color spaces and which ones may or may not be good. And a pretty firm grasp on the standard weighted Euclidean weights for color distance being wrong. Those are fine weights when we're looking for brightness, but how bright we see colors is different from how well we discriminate between colors. You are much better off with 2,4,3 than that 30,59,11 crap. Or with a much better color distance formula.